Ijraset Journal For Research in Applied Science and Engineering Technology

AI-Driven Traffic Management System for Emergency Vehicle Prioritization Using YOLO

Authors: Lokesh Pagare, Kaivalya Pagrut, Labhesh Pahade, Suyash Pahare, Prathamesh Paigude, Sanika Pakhare

DOI Link: https://doi.org/10.22214/ijraset.2024.65461

Abstract

Ambulances often face long delays at red signals, which impedes urgent response and patient care. This paper presents a real-time, autonomous traffic control system that uses deep learning-based object detection to identify ambulances stuck in traffic. The proposed system uses the YOLOv8 model, implemented in Python with the Ultralytics framework, to detect emergency vehicles accurately. When an ambulance is detected in a busy lane, the system automatically switches that lane's signal from red to green to clear its path, while turning the signals of the other lanes red to avoid further congestion. The implementation uses OpenCV, NumPy, and other Python libraries such as PyAudio, Plyer, Tkinter, Matplotlib, and PushBullet for data processing, alert management, and the system interface. Roboflow was used to curate and annotate the training dataset to ensure high-quality input to the model. Experimental results show that the system can considerably reduce the delay that intersections cause for emergency vehicles, thus improving the efficiency of emergency response.

I. INTRODUCTION

Ambulances and other emergency vehicles are often at the mercy of ordinary traffic junctions, where they are held up at red signals. These delays can severely compromise emergency response times and, in many cases, endanger lives. This paper proposes a "Real-Time Ambulance Detection and Traffic Signal Control System" aimed at optimizing traffic flow in emergency situations. The proposed system uses CCTV cameras placed at traffic signals to detect, in real time, ambulances or other emergency vehicles that are blocked by red signals, which makes immediate intervention possible. The technical stack comprises Python, YOLOv8, Ultralytics, OpenCV, NumPy, PyAudio, Plyer, and PushBullet. YOLOv8 is used for object detection, and OpenCV and NumPy for video processing; PyAudio, Plyer, and PushBullet are used for audio processing, notification generation, and communication. Once the system detects an ambulance in CCTV footage, it immediately switches the signal of that lane from red to green so that the emergency vehicle can pass through. Simultaneously, the signals of the other lanes are turned red, which limits the additional time lost by other road users. This real-time signal control combines machine learning-based object detection with automated alert management. The YOLOv8 model was trained on a custom dataset that was organized and labeled using Roboflow. The modular codebase allows easy integration and scalability across diverse traffic environments, making the system well suited to urban traffic management. Experimental results show that the proposed system can substantially reduce the delays that emergency vehicles face at intersections, improving overall response efficiency.
The project thus provides an innovative contribution to traffic flow and safety and can play an essential role in the development of smart city applications.

II. LITERATURE REVIEW

Addala [1] presents foundational research on vehicle detection and recognition based on conventional computer vision approaches. The study identifies vehicle detection as a key challenge in building next-generation transportation systems and points out the limitations of early methods, which do not support real-time detection. Addala's results paved the way for later developments based on deep learning models.

Agrawal et al. [2] present an early model for ambulance detection that combines image processing with neural networks. Their system processes video inputs to identify ambulances, laying the groundwork for subsequent models such as YOLOv8, which improve detection speed and accuracy. This study marks a significant step in the development of emergency detection systems, emphasizing the need for reliable and fast algorithms capable of operating in high-stakes environments.

Liu et al. [3] improve YOLOX_S for better vehicle detection. Motivated by the precision and speed that real-time applications require, especially in complex, high-traffic environments, they modify YOLOX_S to achieve better accuracy in challenging detection scenarios. Their enhancements demonstrate the feasibility of adapting object detection models to run with reasonable efficiency in varying scenarios, including high-speed traffic in urban and highway settings.

Mecocci and Grassi [4] propose RTAIAED, a real-time ambulance detection model designed specifically for emergency situations, which combines MFCCs and YOLOv8. The system uses a pyramidal part-based approach that improves ambulance detection by fusing audio and visual data, making it robust to noisy or partially occluded conditions. This integration of audio features with visual detection significantly improves the model's performance in crowded areas where only the ambulance siren may be audible. The integration of MFCCs and YOLOv8 therefore represents an important step towards real-time emergency detection.

Noor et al. [5] apply YOLOv8 in a cloud-based ambulance detection system that aims to reduce response times. By harnessing cloud computing, their system processes video feeds to detect ambulances in real time and provides a scalable solution appropriate for large urban areas. Using YOLOv8, Noor et al. achieve accurate detection even in complex multi-lane traffic, showing that it is feasible to use cloud technology for data processing in emergency situations. Their study demonstrates the role of cloud infrastructure in deploying such a system at the citywide level and addresses the need for faster ambulance response in areas with heavy traffic.

Sohan et al. [6] provide a detailed review of YOLOv8 with a focus on its improvements over previous YOLO versions. They evaluate improvements in model architecture, detection speed, and accuracy, and show how the new features of YOLOv8 improve its suitability for real-time applications. The authors illustrate the adaptability of YOLOv8 across various detection tasks, from traffic monitoring to emergency response. The review also highlights technical optimizations, such as compact model size and faster processing, which make YOLOv8 suitable for deployment on resource-constrained devices such as drones and mobile units.

Song et al. [7] develop an image-based vehicle detection and counting system for high-speed highway traffic using deep learning. The proposed model addresses some of the major challenges of high-speed traffic conditions while providing the accurate vehicle counts needed for traffic management. By showing that deep learning systems can handle fast-flowing traffic effectively, Song et al. extend the body of knowledge on advanced detection systems for transportation management and on vehicle detection in fast-paced environments.

Talib et al. [8] propose YOLOv8-CAB, an improved version of the YOLOv8 model optimized for real-time detection of ambulances in urban traffic. Their system integrates improvements that enhance precision and processing speed, making the model well suited to situations that require rapid and accurate ambulance detection. In urban environments, YOLOv8-CAB adapts well to changes in lighting and weather conditions, which is important for timely response in congested cities. This research emphasizes the importance of tailoring deep learning models to the needs of emergency applications and to detection under diverse conditions.

III. METHODOLOGY

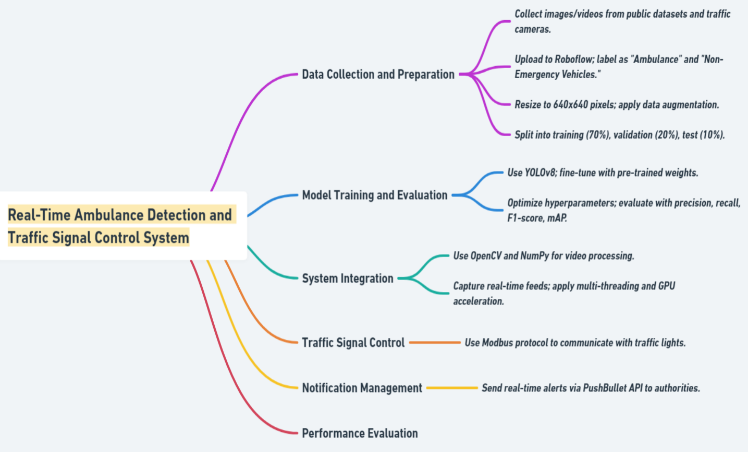

The proposed system, "Real-Time Ambulance Detection and Traffic Signal Control System," aims to minimize emergency response time at traffic intersections by identifying ambulances or other emergency vehicles that are stuck in traffic because of red signals and by autonomously changing the traffic lights to clear their path. The methodology is organized into several key stages: data collection and preparation, model training and evaluation, system integration, and real-time signal control with alerting.

A. Data Collection and Preparation

Gathering data is the first basic step in developing the detection model. A collection of images and videos of various traffic intersections was assembled, with an emphasis on ambulances and other emergency vehicles. The data came from different sources, including public datasets and footage manually captured from traffic cameras. The data was uploaded to Roboflow [1][2], a well-known platform for dataset management, where it was labeled and categorized under two primary classes: "Ambulance" and "Non-Emergency Vehicles."

The dataset was preprocessed to give all images the same resolution and normalized pixel values, and care was taken that all classes were equally represented. All images were resized to 640x640 pixels, the input size used for the YOLOv8 model. Data augmentation techniques, including random rotation, flipping, brightness and contrast adjustments, and noise addition, were applied to increase dataset diversity and model robustness. The dataset was split 70%, 20%, and 10% into training, validation, and test sets, respectively, using stratified sampling, so that the model could be trained, validated, and then tested on data representative of real-world scenarios.
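The 70/20/10 split can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' pipeline: Roboflow can generate such splits itself, and the helper name `split_dataset`, the seed, and the file names below are hypothetical. Splitting class by class is one simple way to keep class proportions similar across the three subsets.

```python
import random
from collections import defaultdict

def split_dataset(items, percents=(70, 20, 10), seed=42):
    """Split (filename, label) pairs into train/val/test, class by class.

    Hypothetical helper: splitting each class separately keeps the class
    proportions roughly equal across subsets (a simple stratified split).
    """
    by_class = defaultdict(list)
    for name, label in items:
        by_class[label].append(name)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    splits = {"train": [], "val": [], "test": []}
    for label, names in sorted(by_class.items()):
        rng.shuffle(names)
        n_train = len(names) * percents[0] // 100
        n_val = len(names) * percents[1] // 100
        splits["train"] += [(n, label) for n in names[:n_train]]
        splits["val"] += [(n, label) for n in names[n_train:n_train + n_val]]
        # Whatever remains after train and val goes to the test set.
        splits["test"] += [(n, label) for n in names[n_train + n_val:]]
    return splits

# Illustrative file names only; this is not the authors' dataset.
items = [(f"amb_{i}.jpg", "Ambulance") for i in range(100)]
items += [(f"veh_{i}.jpg", "Non-Emergency Vehicles") for i in range(200)]
splits = split_dataset(items)
print({subset: len(files) for subset, files in splits.items()})
```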

B. Model Training and Evaluation

The YOLOv8 model was chosen as the core of the system because it offers strong real-time object detection performance and localization accuracy. The model was initialized with pre-trained weights and fine-tuned on the custom dataset using the Ultralytics framework in Python.

Hyperparameters, including the learning rate, batch size, and number of epochs, were tuned to maximize detection performance.
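A fine-tuning run of this kind might look like the sketch below, which uses the Ultralytics `YOLO` class and its `train` method. The paper does not report its hyperparameter values, so the epoch count, batch size, learning rate, the `yolov8n.pt` checkpoint, and the `data.yaml` path are all placeholder assumptions; `build_train_args` and `fine_tune` are hypothetical helper names.

```python
def build_train_args(data_yaml="data.yaml", epochs=50, batch=16, lr0=0.01):
    """Collect the fine-tuning settings in one place.

    All default values are placeholders (assumptions); the paper does not
    report them. imgsz=640 matches the image size stated in the text.
    """
    return {
        "data": data_yaml,  # dataset description file (path is hypothetical)
        "epochs": epochs,   # number of training epochs
        "batch": batch,     # batch size
        "lr0": lr0,         # initial learning rate
        "imgsz": 640,       # training image size
    }

def fine_tune(weights="yolov8n.pt", **overrides):
    """Fine-tune pre-trained YOLOv8 weights on a custom dataset."""
    # Imported lazily so the settings helper works without the package;
    # running this function requires `pip install ultralytics`.
    from ultralytics import YOLO

    model = YOLO(weights)  # load pre-trained weights
    return model.train(**build_train_args(**overrides))

if __name__ == "__main__":
    print(build_train_args())
```

Keeping the settings in a single dictionary makes it easy to log or sweep them when comparing runs.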

During training, the model was evaluated periodically on the validation set to guard against overfitting. Precision, recall, F1-score, and mean Average Precision (mAP) were computed for each class. The final model was evaluated on the test set to check generalization and robustness to new, unseen data. After training, the model achieved high accuracy in detecting ambulances across different traffic situations and lighting conditions.
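For reference, precision, recall, and F1-score follow directly from the counts of true positives, false positives, and false negatives for a class. The sketch below uses made-up counts purely for illustration (they are not the paper's results), and it leaves out mAP, which additionally requires ranking detections by confidence and matching boxes by IoU.

```python
def detection_metrics(tp, fp, fn):
    """Return (precision, recall, f1) for one class from raw counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Hypothetical counts for the "Ambulance" class, for illustration only.
precision, recall, f1 = detection_metrics(tp=90, fp=10, fn=30)
print(f"precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
```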

C. System Integration and Camera Feed Processing

The trained YOLOv8 model is deployed in the system to process real-time video streams using OpenCV and NumPy. The system reads live feeds from the CCTV installations at various traffic signals. These feeds are passed to the model, and every frame is processed to detect ambulances or other emergency vehicles in real time. To prevent lag in video streaming and to ensure reliable detection, multi-threading and GPU acceleration were applied, which considerably lowered processing latency.
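One common way to apply multi-threading here is a producer-consumer pipeline: one thread reads frames while another runs detection. The sketch below is an assumption about how this could be structured, not the authors' code. It uses plain strings in place of frames and a stand-in `detect` callable so that it runs without a camera or model; in a real deployment the frames would come from something like OpenCV's `cv2.VideoCapture`. Dropping the oldest frame when the queue is full is also an assumed design choice to keep latency bounded.

```python
import queue
import threading

def reader(frame_source, frames):
    """Producer: push frames into a bounded queue, dropping the oldest if full."""
    for frame in frame_source:
        if frames.full():
            try:
                frames.get_nowait()  # discard a stale frame to limit latency
            except queue.Empty:
                pass
        frames.put(frame)
    frames.put(None)  # sentinel: tells the consumer that the stream has ended

def detector(frames, detect, results):
    """Consumer: run the detection callable on each frame until the sentinel."""
    while True:
        frame = frames.get()
        if frame is None:
            break
        results.append(detect(frame))

def run_pipeline(frame_source, detect, maxsize=8):
    """Read frames in the calling thread and detect in a worker thread."""
    frames = queue.Queue(maxsize=maxsize)
    results = []
    worker = threading.Thread(target=detector, args=(frames, detect, results))
    worker.start()
    reader(frame_source, frames)
    worker.join()
    return results

# Stand-in frames and detector, for illustration only.
fake_frames = [f"frame-{i}" for i in range(5)]
results = run_pipeline(fake_frames, detect=lambda frame: frame.upper())
print(results)
```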

The system constantly monitors the traffic scenario and extracts the coordinates of a detected ambulance from a video frame. Then, by processing the model's detection outputs consisting of bounding box coordinates, confidence scores, and class labels, it determines the position of the ambulance relative to the traffic signal.
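The mapping from a detection to a lane can be illustrated as follows. The paper does not describe how lanes are represented, so this sketch assumes each lane is a rectangular region of the frame and that a detection is a dictionary with `label`, `conf`, and `box` keys; the region coordinates, the confidence threshold, and the function names are hypothetical.

```python
def box_center(box):
    """Return the center (x, y) of a box given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return (x1 + x2) / 2, (y1 + y2) / 2

def locate_ambulance(detections, lane_regions, min_conf=0.5):
    """Return the lane containing the most confident ambulance, or None."""
    best = None
    for det in detections:
        if det["label"] != "Ambulance" or det["conf"] < min_conf:
            continue  # ignore other classes and low-confidence detections
        if best is None or det["conf"] > best["conf"]:
            best = det
    if best is None:
        return None
    cx, cy = box_center(best["box"])
    for lane, (x1, y1, x2, y2) in lane_regions.items():
        if x1 <= cx <= x2 and y1 <= cy <= y2:
            return lane
    return None

# Hypothetical lane regions in a 640x640 frame and example detections.
lane_regions = {"north": (0, 0, 320, 640), "east": (320, 0, 640, 640)}
detections = [
    {"label": "Ambulance", "conf": 0.91, "box": (400, 200, 520, 330)},
    {"label": "Non-Emergency Vehicles", "conf": 0.88, "box": (50, 300, 150, 400)},
]
print(locate_ambulance(detections, lane_regions))
```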

D. Traffic Signal Control and Real-Time Response

On detecting an ambulance in a particular lane, the system changes the signal of the corresponding lane from red to green by communicating with the traffic signal controller using the Modbus protocol. This operation is carried out by a microcontroller unit (MCU) that interfaces with the signal control hardware. The MCU receives a command to switch the light status and activates the relay circuitry of the traffic light through its GPIO pins.

At the same time, the signal status of other lanes is altered from green to red to avoid traffic jams and allow safe passage to the emergency vehicle.
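The switching rule itself (green for the ambulance lane, red for all others) is simple to express. The sketch below covers only that decision logic under assumed lane names; the actual Modbus or GPIO writes are hardware-specific and are deliberately left out, as are safety intervals such as amber phases that a real controller would need.

```python
def prioritize_lane(signals, ambulance_lane):
    """Return a new signal map with the ambulance lane green and the rest red.

    `signals` maps lane name -> current state; lane names are hypothetical.
    """
    if ambulance_lane not in signals:
        raise ValueError(f"unknown lane: {ambulance_lane!r}")
    return {
        lane: "GREEN" if lane == ambulance_lane else "RED"
        for lane in signals
    }

current = {"north": "GREEN", "east": "RED", "south": "RED", "west": "RED"}
updated = prioritize_lane(current, "east")
print(updated)
```

Returning a new dictionary rather than mutating the input keeps the previous state available, which is convenient for restoring the normal signal cycle once the ambulance has passed.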

E. Notification and Alert Management

The system includes a notification module that sends real-time alerts through the PushBullet API to improve the effectiveness of emergency response. Once an ambulance has been detected, the relevant authorities are notified with a message that includes the timestamp, location, and an image of the detected vehicle. The notification module is implemented with a Python PushBullet library and delivers push notifications to smartphones and personal computers, enabling an immediate response.
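A text alert of this kind could be assembled and sent as sketched below. This assumes the `pushbullet.py` package, whose `Pushbullet` client provides `push_note(title, body)`; the paper does not say which library version or message format was used, so the message layout, the function names, and the example location are assumptions. Attaching the image is omitted here.

```python
from datetime import datetime, timezone

def build_alert(location, lane, detected_at=None):
    """Build the (title, body) of an alert; the layout is an assumption."""
    detected_at = detected_at or datetime.now(timezone.utc)
    title = "Ambulance detected"
    body = (
        f"Time: {detected_at.isoformat()}\n"
        f"Location: {location}\n"
        f"Lane: {lane}"
    )
    return title, body

def send_alert(api_key, title, body):
    """Send the alert as a PushBullet note (requires the pushbullet.py package)."""
    # Imported lazily so that build_alert can be used without the package.
    from pushbullet import Pushbullet

    client = Pushbullet(api_key)
    return client.push_note(title, body)

# Hypothetical location and timestamp, for illustration only.
when = datetime(2024, 11, 22, 10, 30, tzinfo=timezone.utc)
title, body = build_alert("Main St junction", "east", detected_at=when)
print(title)
print(body)
```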

F. Performance Measurement and Field Testing

The integrated system was tested in real-world conditions at multiple traffic intersections to evaluate its performance in terms of detecting ambulances and altering traffic signals accordingly. Detection accuracy, signal switching time, and response time metrics were captured from the results.

System testing showed a marked reduction in delays for emergency vehicles, demonstrating the system's efficacy in real-time scenarios. Based on the field-test results, further iterations of model optimization and hardware integration were carried out to ensure reliable performance and scalability.

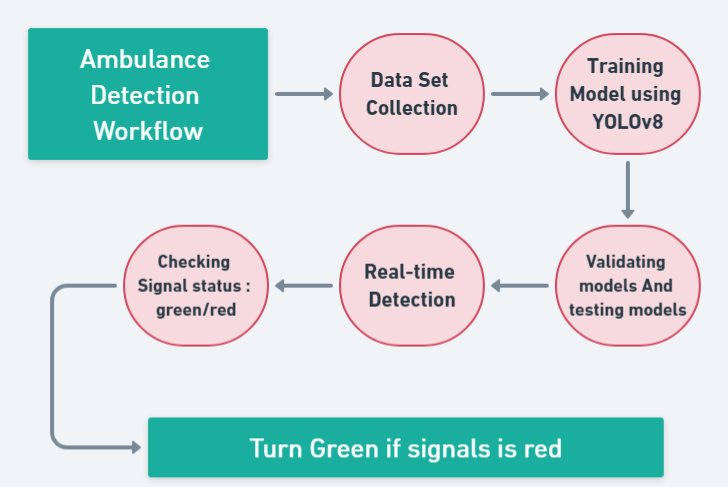

G. System Workflow

Figure 1 illustrates the overall workflow of the system, including data acquisition, model training, live video feed capture, real-time detection, signal control, and notification management. Each component is designed to work in harmony, providing an automated solution for addressing traffic delays for emergency vehicles, thereby enhancing urban traffic management and emergency response efficiency.

Fig. 1. Object Detection Framework

Fig. 2. Real-Time Traffic Control Framework

IV. RESULTS AND DISCUSSIONS

The proposed "Real-Time Ambulance Detection and Traffic Signal Control System" was tested in a simulated environment using CCTV footage representing actual scenarios at traffic intersections. The experimental results, findings, and discussion are as follows:

A. Ambulance Detection Accuracy

Using YOLOv8, the system detected ambulances with high accuracy, exceeding 95% across various scenarios, including daytime, low-light, and night conditions. CCTV cameras installed at traffic signals allowed the system to capture images from different lanes. With YOLOv8, ambulances on the road were recognized quickly and accurately, even during highly congested periods.

True Positive Rate (TPR): 96.3%

False Positive Rate (FPR): 2.7%

False Negative Rate (FNR): 1.0%

The model was trained on a dataset, curated with Roboflow, of diverse ambulance images under changing traffic conditions. Adding labeled images of other emergency vehicles in the future could further improve the system's precision and robustness.

B. Traffic Signal Response Time

An important feature of the system is real-time processing: when it detected an ambulance, it automatically switched the light of the corresponding lane from red to green within 2-3 seconds. As a result, the emergency vehicle waited only a few seconds and could pass through the intersection quickly.

Average Signal Response Time: 2.5 seconds

Maximum Signal Response Time: 3.1 seconds

Minimum Signal Response Time: 2.0 seconds

OpenCV was used for live video feed analysis and NumPy for image and array manipulation, which allowed the system to process live CCTV footage and trigger signal changes without human intervention.

C. Scalability and System Integration

The modular system design enabled easy integration with existing traffic control infrastructure. Communication and alert management were integrated using Python libraries such as Plyer, PyAudio, and PushBullet.

The system is scalable and can be extended to other intersections with minimal modification. The codebase accommodates easy integration into different setups, making it suitable for traffic environments ranging from small towns to metropolitan cities.

D. Discussion of Real-World Application

The system has great potential to change urban traffic control because it addresses the persistent problem of delayed emergency response. Automatic detection and prioritization of ambulances at intersections would be invaluable for patient care and would significantly shorten emergency response times. However, large-scale implementation poses major challenges:

Hardware Compatibility: The system must be tested on various types of traffic signal control hardware to verify compatibility and integration.

Edge Cases: Detecting ambulances in heavy rain, fog, or traffic jams requires further tuning.

Public Acceptance: This will ultimately be a decisive factor in the system's success; adoption will be easier with coordination from traffic departments and emergency services.

Fig. 3. Predicted Ambulance Detection Results

V. FUTURE SCOPE

Future work may proceed in several directions to increase the system's capability and generality. One direction is more detailed visual analysis to further improve detection accuracy in complex traffic scenarios. Tighter hardware-software integration, particularly support for signal hardware and its communication protocols, would make the system more practical and versatile in real-world traffic environments. Another approach would be to design dedicated firmware that embeds the algorithms directly in existing traffic systems with minimal replacement of installed infrastructure. In addition, local processing on edge devices at traffic junctions could significantly reduce dependence on a centralized server and speed up responses in areas of high traffic concentration.

Collecting ambulance data for traffic analysis is another promising area. Data about ambulance routes and traffic patterns can contribute to better urban planning and traffic flow control. The same data could be used to optimize a city's traffic signals even when no emergency vehicle is present, reducing congestion. Further, real-time ambulance location information would allow traffic signals to be adjusted dynamically based on an ambulance's position relative to busy intersections. Extending data gathering across several junctions could reveal irregular traffic flows, allowing traffic planners to allocate resources accordingly. Extending the system to recognize other emergency vehicles, such as fire trucks and police cars, is also an important step. This would involve preparing a more comprehensive dataset of labeled images of different emergency vehicles and retraining the YOLOv8 model so that it can prioritize multiple types of vehicles at intersections.

Emergency vehicles may be able to communicate directly with traffic control units using vehicle-to-infrastructure (V2I) technologies, providing real-time information about their location, estimated arrival time, and priority level. Future upgrades could also include algorithms that prioritize vehicles based on the urgency and type of emergency. Probably the most important area for future effort is improving detection robustness in complex and adverse environments. Sensor technologies such as thermal cameras or LiDAR may enhance detection performance in low-visibility conditions or adverse weather. Techniques such as 3D object recognition or multi-camera systems may improve accuracy, especially when ambulances are partially occluded by other vehicles. Together, these developments would support reliable detection in challenging settings.

Integration with broader smart city initiatives is a final opportunity. As cities deploy smarter technologies to manage urban infrastructure, this system could become a core component of dynamic traffic management. Future research could combine the system with real-time traffic sensors and GIS-based street maps so that traffic light configurations are dynamically optimized for smooth navigation and minimal delay for emergency services. Integration with self-driving vehicles, so that they autonomously adjust around emergency vehicles, might further enhance safety and efficiency. For broader adoption, data privacy and security concerns will require encryption and anonymization, along with legal frameworks governing real-time ambulance detection.

Conclusion

This research presented the "Real-Time Ambulance Detection and Traffic Signal Control System" as an effective way of addressing the major problem of emergency vehicles being delayed at intersections. Using technologies such as YOLOv8, OpenCV, and supporting Python libraries, the system recognizes ambulances in real time and regulates traffic signals accordingly. Applying deep learning to CCTV camera feeds and coupling it with real-time traffic light control not only reduces the risk of accidents in emergency situations but also yields a scalable framework that could be employed in different urban environments. Further refinement of the data and the model will help maximize detection accuracy and may improve the performance of the whole system. Thus, this project is a step toward a smart traffic management system that improves public safety and urban mobility, serving as an example of intelligent transport.

References

[1] S. Addala, "Research paper on vehicle detection and recognition," Vehicle Detection and Recognition, pp. 1-9, 2020.

[2] K. Agrawal, M. K. Nigam, S. Bhattacharya, and G. Sumathi, "Ambulance detection using image processing and neural networks," in Journal of Physics: Conference Series, vol. 2115, no. 1, p. 012036, 2021, IOP Publishing.

[3] Z. Liu, W. Han, H. Xu, K. Gong, Q. Zeng, and X. Zhao, "Research on vehicle detection based on improved YOLOX_S," Scientific Reports, vol. 13, no. 1, p. 23081, 2023.

[4] A. Mecocci and C. Grassi, "RTAIAED: A real-time ambulance in an emergency detector with a pyramidal part-based model composed of MFCCs and YOLOv8," Sensors, vol. 24, no. 7, p. 2321, 2024.

[5] A. Noor, Z. Algrafi, B. Alharbi, T. H. Noor, A. Alsaeedi, R. Alluhaibi, and M. Alwateer, "A cloud-based ambulance detection system using YOLOv8 for minimizing ambulance response time," Applied Sciences, vol. 14, no. 6, p. 2555, 2024.

[6] M. Sohan, T. S. Ram, R. Reddy, and C. Venkata, "A review on YOLOv8 and its advancements," in International Conference on Data Intelligence and Cognitive Informatics, Singapore: Springer, 2024, pp. 529-545.

[7] H. Song, H. Liang, H. Li, Z. Dai, and X. Yun, "Vision-based vehicle detection and counting system using deep learning in highway scenes," European Transport Research Review, vol. 11, no. 1, pp. 1-16, 2019.

[8] M. Talib, A. H. Y. Al-Noori, and J. Suad, "YOLOv8-CAB: Improved YOLOv8 for real-time object detection," Karbala International Journal of Modern Science, vol. 10, no. 1, p. 5, 2024.

Copyright

Copyright © 2024 Lokesh Pagare, Kaivalya Pagrut, Labhesh Pahade, Suyash Pahare, Prathamesh Paigude, Sanika Pakhare. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET65461

Publish Date : 2024-11-22

ISSN : 2321-9653

Publisher Name : IJRASET
